Enterprise Retail Data Pipeline: Hybrid Cloud Sync Operations

High-throughput data engineering pipeline orchestrating hybrid cloud synchronization between Azure and GCP for a major European retailer.

Project Overview

The Challenge

The retailer struggled with fragmented data silos across Azure and disparate operational databases, causing latency of more than 24 hours in pricing analytics.

Legacy ingestion scripts were brittle, failing silently and requiring manual intervention for data repairs.

No centralized schema governance existed, leading to 'data swamps' where analyst queries frequently broke due to upstream changes.

Infrastructure scaling was manual and could not cope with holiday-season traffic spikes.

Engineered a critical data synchronization pipeline to bridge legacy Azure infrastructure with a modern Google Cloud Platform (GCP) data lake for a Fortune 500-equivalent retailer. The system ensures 99.9% data availability for pricing and inventory analytics.

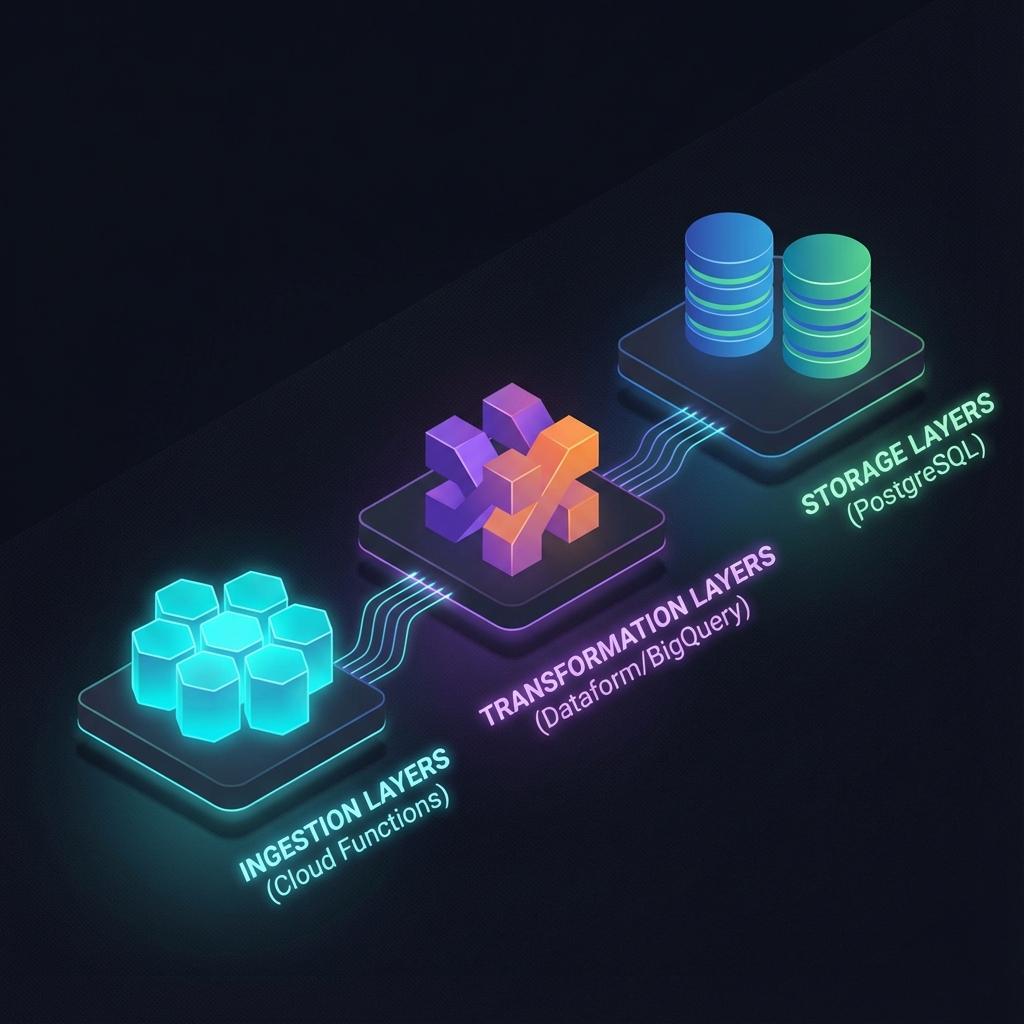

Architected a serverless ingestion layer using Google Cloud Functions (Python) to sanitize and transfer TB-scale datasets. Implemented complex SQL transformation logic using Dataform within BigQuery to standardize data schemas across the organization.

Built self-healing infrastructure automation with GKE pod management and comprehensive CI/CD pipelines to ensure zero operational downtime during scaling events. This project enabled the transition to real-time pricing strategies.

Technical Architecture

Ingestion Layer: Python-based Cloud Functions triggered by storage events to process data from Azure inputs.

Storage & Transformation: BigQuery serves as the Data Lakehouse, with Dataform managing complex SQL transformation pipelines and dependency graphs.

Operational Sync: Metadata and operational state synchronized with PostgreSQL for real-time application access.

Self-Healing Ops: Automated functions continually monitor and reset GKE pods and infrastructure components to maintain high availability.
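
As a rough illustration of the ingestion layer described above, the sketch below shows how a storage-event handler might sanitize an incoming CSV file. It uses only the standard library and assumes the event payload carries `bucket` and `name` fields, as Cloud Storage events do. The names `handle_storage_event`, `sanitize_csv`, and the injected `read_object` callable are hypothetical stand-ins, not the project's actual code, and the specific cleaning rules are assumptions.

```python
import csv
import io
from typing import Callable


def sanitize_csv(raw: str) -> list[dict[str, str]]:
    """Trim whitespace from headers and values, and drop rows with no data."""
    rows = []
    for row in csv.DictReader(io.StringIO(raw)):
        # Missing trailing fields arrive as None; surplus fields arrive under key None.
        cleaned = {
            key.strip(): (value or "").strip()
            for key, value in row.items()
            if key is not None
        }
        if any(cleaned.values()):
            rows.append(cleaned)
    return rows


def handle_storage_event(
    event_data: dict, read_object: Callable[[str, str], str]
) -> list[dict[str, str]]:
    """Process one storage event; `read_object` is a hypothetical object reader."""
    bucket = event_data["bucket"]
    name = event_data["name"]
    if not name.endswith(".csv"):
        return []  # Assumption: only CSV drops are relevant to this pipeline.
    return sanitize_csv(read_object(bucket, name))
```

Passing the object reader in as a parameter keeps the handler testable without cloud credentials; a deployed function would supply a reader backed by the storage client.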

Key Challenges & Solutions

Cross-Cloud Data Consistency

Solved data reliability issues during transfer between Azure and GCP by implementing atomic write operations and checksum validations in Cloud Functions.
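
A minimal sketch of the checksum-then-atomic-write idea, using the local filesystem as a stand-in for the real destination. The choice of SHA-256 and the function names `sha256_hex` and `atomic_write_verified` are assumptions made for illustration; the source does not specify the checksum algorithm or the write mechanism used in the Cloud Functions.

```python
import hashlib
import os
import tempfile


def sha256_hex(data: bytes) -> str:
    """Return the hex SHA-256 digest of a payload."""
    return hashlib.sha256(data).hexdigest()


def atomic_write_verified(data: bytes, expected_sha256: str, dest_path: str) -> None:
    """Write `data` to `dest_path` only if its checksum matches the sender's."""
    actual = sha256_hex(data)
    if actual != expected_sha256:
        raise ValueError(f"checksum mismatch: expected {expected_sha256}, got {actual}")

    # Write to a temporary file in the same directory, then rename it into place.
    # os.replace is atomic on the same filesystem, so readers never see a partial file.
    dest_dir = os.path.dirname(os.path.abspath(dest_path))
    fd, tmp_path = tempfile.mkstemp(dir=dest_dir)
    try:
        with os.fdopen(fd, "wb") as tmp:
            tmp.write(data)
            tmp.flush()
            os.fsync(tmp.fileno())
        os.replace(tmp_path, dest_path)
    except BaseException:
        # Clean up the temporary file if anything failed before the rename.
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise
```

Verifying before writing means a corrupted transfer never replaces a previously good file, which is the property that matters for downstream consumers.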

Managing Complex SQL Dependencies

Migrated ad-hoc script transformations to Dataform, creating a dependency-aware execution architecture that prevents downstream failures when upstream data is missing.
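
To illustrate what dependency-aware execution means here, the following Python sketch plans a run over a small dependency graph and skips every transformation that sits downstream of a missing source. It is a conceptual model only, not how Dataform is implemented; the function `plan_run` and the table names in the usage note are hypothetical.

```python
from graphlib import TopologicalSorter


def plan_run(
    dependencies: dict[str, set[str]], missing_sources: set[str]
) -> tuple[list[str], list[str]]:
    """Split transformations into (runnable, skipped) in dependency order.

    `dependencies` maps each transformation to the names it reads from.
    Names that never appear as keys are treated as raw sources.
    """
    blocked = set(missing_sources)
    runnable: list[str] = []
    skipped: list[str] = []
    for node in TopologicalSorter(dependencies).static_order():
        if node not in dependencies:
            continue  # Raw source, nothing to execute.
        if dependencies[node] & blocked:
            # An upstream input is missing or was itself skipped: propagate the block.
            blocked.add(node)
            skipped.append(node)
        else:
            runnable.append(node)
    return runnable, skipped
```

For example, with `stg_prices` reading `raw_prices`, `stg_stock` reading `raw_stock`, and `pricing_report` reading both staging tables, a missing `raw_stock` leaves only `stg_prices` runnable, while `stg_stock` and `pricing_report` are skipped rather than failing mid-run.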

Infrastructure Reliability at Scale

Developed 'Reset GKE Pods' automation functions to detect and resolve stuck operational pods without human intervention, reducing on-call alerts by 60%.
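
The detection half of such automation could look roughly like the sketch below, which flags pods that have been unready for longer than a threshold. The `PodStatus` shape, the `find_stuck_pods` name, and the ten-minute default are all assumptions for illustration; the source does not describe the actual detection criteria.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class PodStatus:
    name: str
    ready: bool
    last_transition: datetime  # When the readiness state last changed.


def find_stuck_pods(
    pods: list[PodStatus],
    now: datetime,
    max_unready: timedelta = timedelta(minutes=10),
) -> list[str]:
    """Return names of pods that have been unready for longer than `max_unready`."""
    return [
        pod.name
        for pod in pods
        if not pod.ready and now - pod.last_transition > max_unready
    ]
```

In a real cluster the returned names would then be handed to a reset step. One common approach is to delete the pod through the Kubernetes API so that its controller recreates it, though whether this project does so is not stated.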

Impact & Results

Modernized key pricing data pipelines for a major retailer, enabling same-day analytics

Automated TB-scale daily data ingestion with 99.9% reliability

Established Dataform as the standard for 500+ SQL transformations, ensuring code reuse

Reduced maintenance overhead via self-healing infrastructure automation, cutting on-call alerts by 60%

Key Features

- Hybrid Cloud Data Sync (Azure to GCP) via Cloud Functions

- Serverless Data Ingestion pipeline (Python)

- SQL-based transformations using Dataform (BigQuery)

- Self-healing infrastructure (GKE Pod Reset automation)

- Automated Data Quality Assertions/Testing

- Secure credential management via Secret Manager

- CI/CD with automated testing and deployment

- PostgreSQL operational database synchronization

- Scalable execution handling TB+ daily data loads
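
The data quality assertions listed above typically amount to checks such as "this column is never empty" or "this key is unique". The Python sketch below models those two checks over in-memory rows purely for illustration; in the pipeline itself such checks would run as SQL inside BigQuery, and the helper names `check_not_null` and `check_unique` are hypothetical.

```python
from collections import Counter


def check_not_null(rows: list[dict], column: str) -> list[int]:
    """Return the indexes of rows where `column` is missing or empty."""
    return [i for i, row in enumerate(rows) if row.get(column) in (None, "")]


def check_unique(rows: list[dict], column: str) -> list:
    """Return the values of `column` that occur more than once, in first-seen order."""
    counts = Counter(row.get(column) for row in rows)
    return [value for value, count in counts.items() if count > 1]
```

Each check returns the offending rows or values instead of raising, so a caller can report every violation in one pass before deciding whether to fail the run.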

Project Details

Client

Mercio

Timeline

2024 - Present

Role

Software Engineer (Data Engineering)

© 2026 Firas Jday. All rights reserved.